Since May 2025, UK Export Finance (UKEF) has been trialling 30 Microsoft 365 Copilot licences, distributed across a range of staff. These participants have been trying out the AI product in their day-to-day work and recording their experiences.

What did we want to learn?

Our goal was to understand trial participants’ experience of using M365 Copilot for two months in their day-to-day work, including:

- How easy they found Generative AI (GenAI) to use

- What worked well for them, and - no less importantly - what didn’t

- What benefits it gave them, in terms of time and efficiency savings

- How confident they felt using it

- Their interest in continuing to use it after the trial period

All the data and findings we gathered would be used to help inform a decision on purchasing more M365 Copilot licences in the future and exploring AI’s potential more broadly across UKEF. We also wanted to learn more about the potential training needs of UKEF users for this new technology.

Methodology:

We used a mixed-methods approach, including two surveys (an initial single-entry survey and a repeated behavioural survey), in-depth interviews over Teams, and three short research tasks: a card-sorting activity, a Copilot meta-analysis task using a prompt we sent participants, and a dot voting activity. This allowed us to combine quantitative data with qualitative insights.

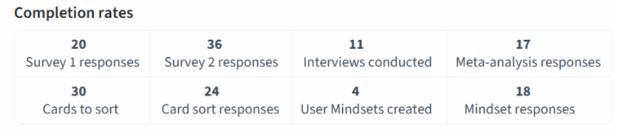

Participant engagement with our research was generally high throughout the trial.

Participants:

The 30 staff were self-selecting and came from all areas of the organisation, including DDaT, Project Management Office, Business Group, Private Office, HR, IT Security and Learning & Development. A number of participants were also members of our Disability, Carers and Neurodiversity Network (D-CAN).

As an aside, the image above reveals something about the limitations of GenAI. Copilot was prompted multiple times to “Create a small image of 30 human beings. Do this as colourful genderless icons in a form that I could use as an image in a slidedeck”. However, it appeared incapable of doing this correctly. On each occasion it produced a grid with only 24 or 25 icons, even when reprompted to correct itself. GenAI’s sporadic inability to count or spell correctly is well documented; see, for example, the HackerNoon article ‘Why Can't AI Count the Number of "R"s in the Word "Strawberry"?’

What did we learn?

- M365 Copilot has delivered productivity benefits across UKEF, particularly in drafting, summarisation, and strategic exploration. Participants appreciated its role in simplifying complex or mundane tasks.

- Confidence in using Copilot grew over time but varied depending on participants’ existing familiarity with AI tools. Prompting was a recurring theme—users who experimented more or received guidance upfront tended to get better and more productive outputs from the tool.

- Some aspects of using the tool proved more problematic. Some participants identified the inconsistent accuracy of results, the variable formatting of outputs, and the tool’s inability to carry out more advanced data analysis as areas of challenge.

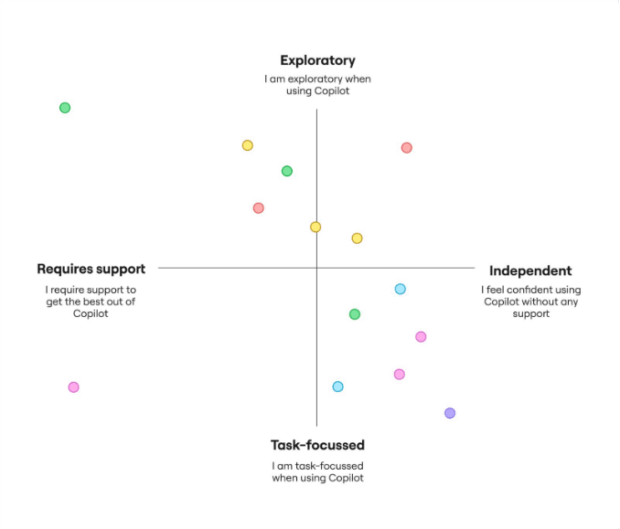

- Usage styles varied across participants. We identified four user ‘mindsets’ from this study, which have helped us understand how Copilot fits into different workflows - from users looking to speed up mundane or repetitive tasks, to those aiming for strategic use of the tool as a ‘thought-partner’, and from curious collaborators trying out the tool for the first time, to empowered early adopters seeking to push the boundaries of GenAI in their daily workflow.

- Overall, our research suggests that while Copilot is not yet transformative, it is a valuable and promising tool – especially when paired with user training and realistic expectations.*

*N.B. Much of the above summary was drafted by Copilot itself, based on the qualitative and quantitative research insights gathered during this trial. As ‘humans in the loop’, we nevertheless maintain that it represents an accurate reflection of what we’ve learned.

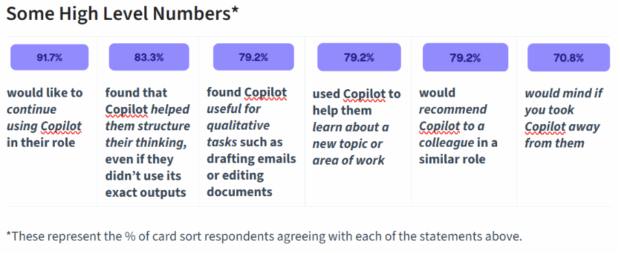

The outcome of the card sorting activity underscores this generally positive view of Copilot by those who took part in our trial.

We also asked participants to rate themselves in terms of the way they use Copilot. More participants rated themselves as independent users of Copilot than as requiring support. Roughly equal proportions described their usage as exploratory and as task-focussed.

One insight in particular stood out. We had not anticipated how strongly neurodiverse trial participants would appear to benefit from Copilot. We heard from a number of such trial participants how beneficial Copilot was in allowing them to overcome ‘blank-page syndrome’ and get started with work tasks. One of them even described the tool as a ‘gamechanger’ for their work. This is encouraging and echoes the findings of similar research into Copilot that has been carried out elsewhere.

What does all this mean for UKEF?

The trial has proved extremely beneficial in helping us better understand the potential benefits and limitations of Copilot for UKEF staff at this stage.

Our research has shown that Copilot has real potential to enhance how we work at UKEF – but like any tool, its success depends on how well it’s understood, supported, and integrated into our daily routines, something which we are now working to develop.

Based on this trial, we are therefore recommending that UKEF continues to:

- Expand Training: Develops tailored learning pathways and peer-led support to help users get the most from Copilot.

- Refine Use Cases: Focuses on high-impact tasks where Copilot adds clear value, such as strategic drafting, the automation of more mundane tasks, and data analysis.

- Create Ongoing Feedback Loops: Continues gathering user insights to shape future iterations and ensure Copilot usage evolves with user needs.

- ‘Horizon Scan’ the AI Landscape: Explores new models and tools, runs internal experiments, and continues to upskill the department through training as the technology evolves.
